Francesca Pianosi, University of Bristol

Thorsten Wagener, University of Bristol

THE CHALLENGE

Our goal was to provide a suite of tools and methods to support uncertainty and risk assessment in natural hazards – to improve the transparency and defensibility of risk management decisions.

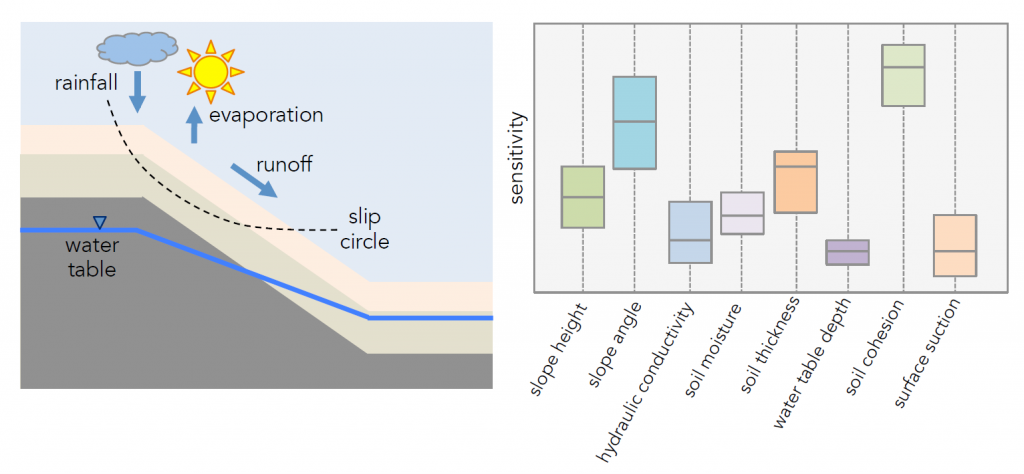

Good modelling practice requires an assessment of the confidence in a model. Predictions arising from numerical models are affected by potentially large uncertainties due to a range of factors, such as observation errors and uncertainty in model parameters and structure. Depending on the model application, such uncertainties can undermine the robustness and credibility of the modelling results to the extent that the usefulness of environmental models for supporting decision-making must be called into question. Global Sensitivity Analysis (GSA) provides a valuable tool for both model developers and users to quantify the uncertainty in model outputs, estimate the relative contribution of the different input factors to such uncertainty, and thus prioritize efforts for its reduction.

Sensitivity analysis is recommended practice when model predictions are the substance of regulatory analysis or policy appraisal.

WHAT WAS ACHIEVED

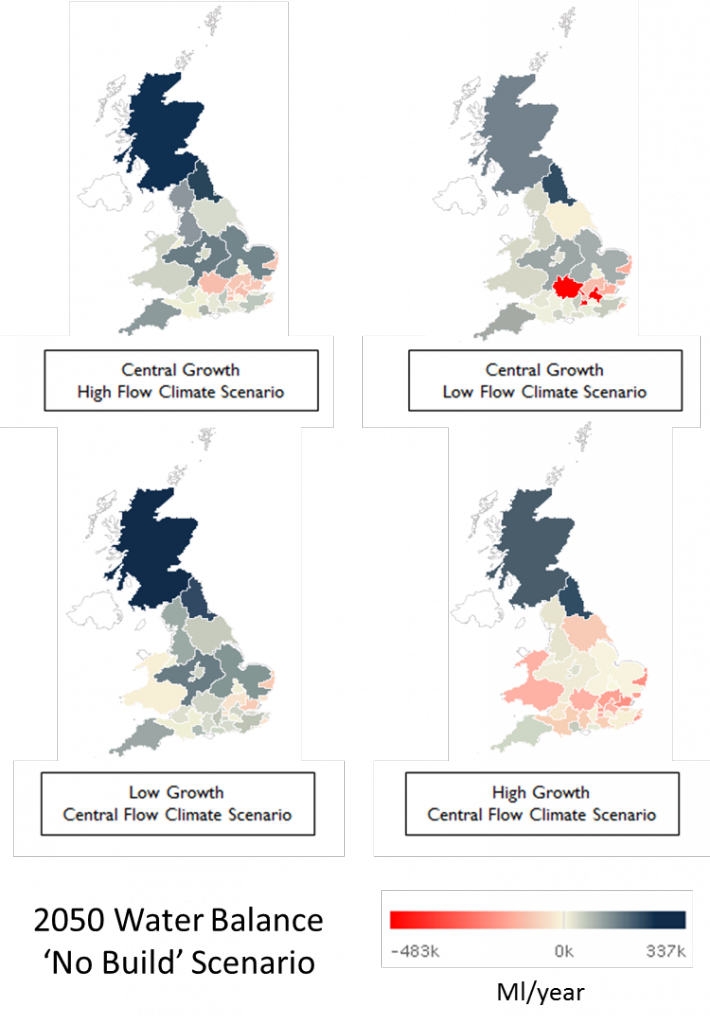

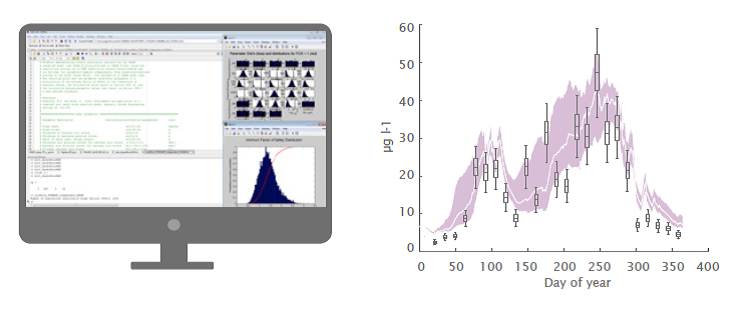

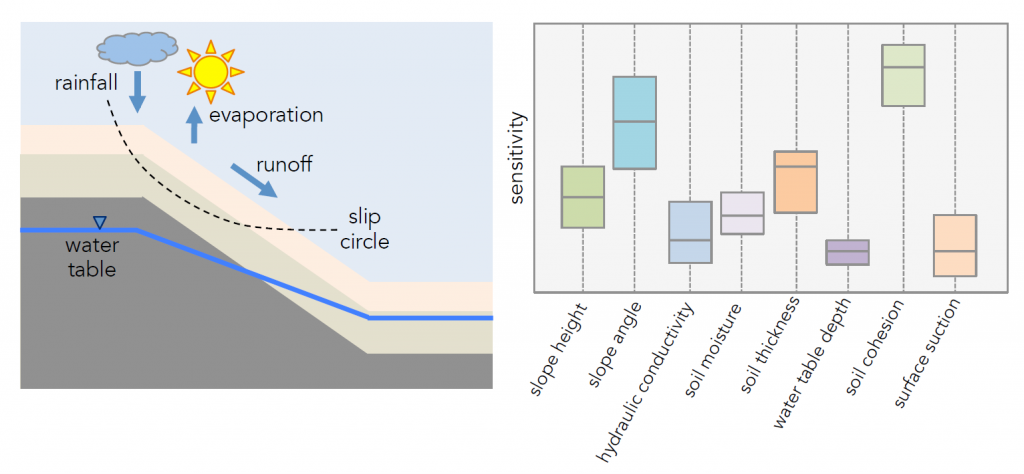

The SAFE tool allows those who use and develop models – in academia, government and industry – to investigate the potential for model simplification by identifying model components that have little impact on model behaviour and can therefore be omitted in a simplified version of the model. It also supports model calibration by identifying the parameters that most influence model accuracy (i.e. the ability to reproduce observations) and therefore need to be properly calibrated. Further, SAFE supports model validation by checking consistency between the model response and our understanding of the system represented by the model, and model debugging by identifying combinations of input factors (model parameters, initial or boundary conditions, input data, etc.) that cause the model to fail. Finally, SAFE enables the user to identify the major sources of model uncertainty, e.g. errors in input data or uncertainty in parameters, and thus to prioritize efforts for uncertainty reduction.

SAFE currently has over 300 academic users in a wide range of application areas. It is also being trialled by industrial users, including catastrophe risk modelling companies, environmental consultants, and technology and manufacturing companies.

“The SAFE toolbox, and the thinking behind it, are helping us to get more out of the models we use in assessing strategies for cost-effective river water quality monitoring, ultimately supporting decisions about catchment management to meet the requirements of the European Water Framework Directive” (Rob Lamb, JBA)

HOW WE DID IT

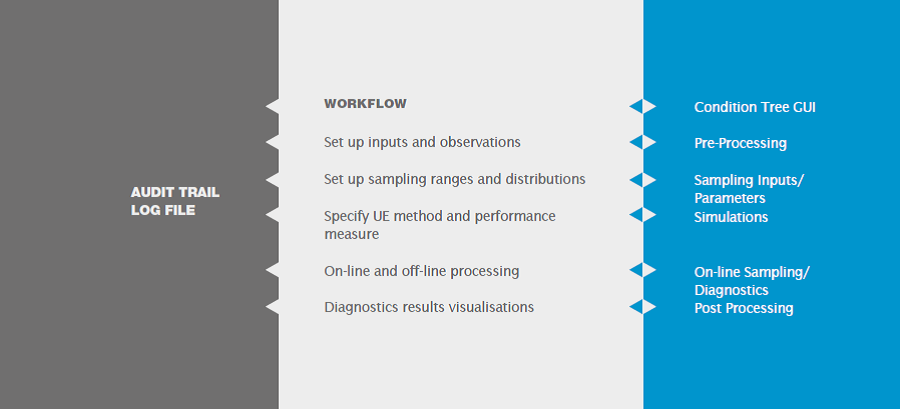

The SAFE Toolbox provides a set of functions to perform Global Sensitivity Analysis in Matlab (or equivalently in the free software Octave) and R.

GSA methods implemented in SAFE:

- Elementary Effects Test (or method of Morris)

- Regional Sensitivity Analysis (RSA)

- Variance-Based Sensitivity Analysis (Sobol’)

- Fourier Amplitude Sensitivity Test (FAST)

- DYNamic Identification Analysis (DYNIA)

- Density-based PAWN Sensitivity Analysis (Pianosi and Wagener, 2015)
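To give a flavour of what such methods compute, the following is a minimal sketch of an Elementary Effects style screening in plain Python. It is not SAFE's implementation: the `toy_model` function, the `elementary_effects` helper, and the simple one-at-a-time design with a fixed step `delta` are all assumptions made for illustration.

```python
import random

def toy_model(x):
    # Hypothetical test function: strong in x[0], weak in x[1], inert in x[2].
    return 4.0 * x[0] + 0.5 * x[1] ** 2 + 0.0 * x[2]

def elementary_effects(model, n_inputs, n_base_points=50, delta=0.1, seed=0):
    """Mean absolute elementary effect of each input on the unit hypercube."""
    rng = random.Random(seed)
    totals = [0.0] * n_inputs
    for _ in range(n_base_points):
        # Sample the base point so that x_i + delta stays inside [0, 1].
        base = [rng.uniform(0.0, 1.0 - delta) for _ in range(n_inputs)]
        y_base = model(base)
        for i in range(n_inputs):
            # Perturb one input at a time and record the scaled output change.
            perturbed = list(base)
            perturbed[i] += delta
            totals[i] += abs(model(perturbed) - y_base) / delta
    return [total / n_base_points for total in totals]

mu_star = elementary_effects(toy_model, n_inputs=3)
# Rank inputs from most to least influential.
ranking = sorted(range(3), key=lambda i: mu_star[i], reverse=True)
print(mu_star, ranking)
```

An input with a mean absolute effect near zero, such as `x[2]` here, is a candidate for fixing or omitting in a simplified model.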

The unique features of SAFE:

- Modular structure to facilitate interactions with other computing environments

- Set of functions to assess the robustness and convergence of sensitivity indices

- Several visualization tools to investigate and communicate GSA results

- Extensively commented code and workflow examples to help new users get started
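One common way to assess the robustness of a sensitivity index is to bootstrap the sample it was computed from and inspect the width of the resulting confidence interval. The sketch below illustrates that idea in plain Python. The `bootstrap_ci` helper and the synthetic `effects` sample are hypothetical and do not reproduce SAFE's own functions.

```python
import random

def bootstrap_ci(values, n_resamples=500, alpha=0.05, seed=0):
    """Percentile bootstrap interval for the mean of `values`."""
    rng = random.Random(seed)
    n = len(values)
    means = []
    for _ in range(n_resamples):
        # Resample with replacement and recompute the index (here, a mean).
        resample = [values[rng.randrange(n)] for _ in range(n)]
        means.append(sum(resample) / n)
    means.sort()
    lower = means[int((alpha / 2) * n_resamples)]
    upper = means[int((1 - alpha / 2) * n_resamples) - 1]
    return lower, upper

# Synthetic stand-in for per-sample contributions to an index,
# e.g. absolute elementary effects of one input (assumed data).
data_rng = random.Random(1)
effects = [abs(data_rng.gauss(2.0, 0.5)) for _ in range(200)]

estimate = sum(effects) / len(effects)
low, high = bootstrap_ci(effects)
print(f"index = {estimate:.3f}, 95% interval = [{low:.3f}, {high:.3f}]")
```

Repeating the calculation on growing subsets of the sample gives a simple convergence check: if the interval stops narrowing appreciably, additional model runs are unlikely to change the conclusion.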

A general introduction to the rationale and architecture of SAFE is given in Pianosi et al. (2015).

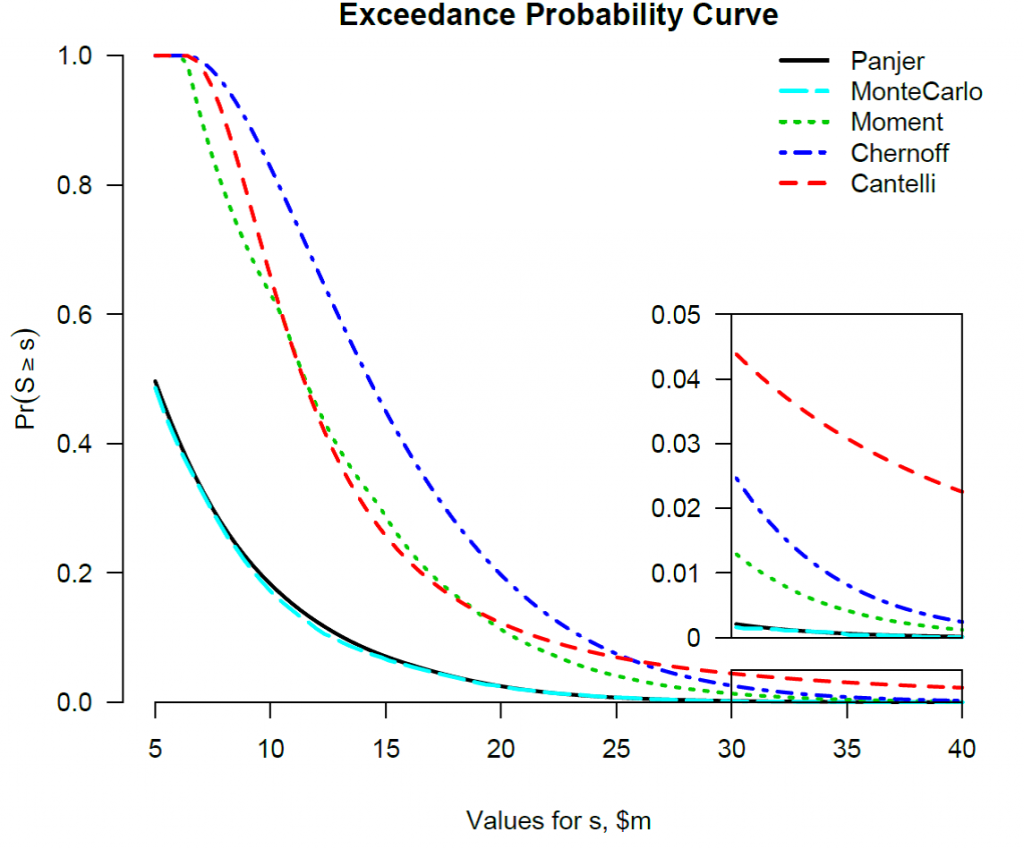

A literature review of global sensitivity analysis techniques formed the basis for the methods incorporated in the SAFE software package. The review also made clear that additional methods were needed. This led to a novel approach to global sensitivity analysis based on cumulative distribution functions, named PAWN (Pianosi and Wagener, 2015).
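The idea of a sensitivity measure built on cumulative distribution functions can be sketched as follows: compare the output distribution obtained when all inputs vary with the distribution obtained when one input is held fixed, and summarise the gap between the two empirical CDFs. The code below is an illustrative approximation in plain Python, not the published algorithm or SAFE code. The `toy_model`, the sample sizes, the use of the Kolmogorov-Smirnov distance, and taking the maximum over conditioning values are assumptions.

```python
import bisect
import random

def toy_model(x):
    # Hypothetical test function: x[0] dominates, x[2] has no effect.
    return 4.0 * x[0] + 0.5 * x[1] ** 2 + 0.0 * x[2]

def ks_distance(sample_a, sample_b):
    """Maximum vertical gap between the empirical CDFs of two samples."""
    a, b = sorted(sample_a), sorted(sample_b)
    gap = 0.0
    for y in a + b:
        cdf_a = bisect.bisect_right(a, y) / len(a)
        cdf_b = bisect.bisect_right(b, y) / len(b)
        gap = max(gap, abs(cdf_a - cdf_b))
    return gap

def cdf_based_indices(model, n_inputs, n_uncond=500, n_cond=200, n_fixed=10, seed=0):
    """One index per input: largest CDF gap over several fixed values."""
    rng = random.Random(seed)

    def draw():
        return [rng.random() for _ in range(n_inputs)]

    # Unconditional output sample: all inputs vary together.
    y_uncond = [model(draw()) for _ in range(n_uncond)]
    indices = []
    for i in range(n_inputs):
        distances = []
        for _ in range(n_fixed):
            fixed_value = rng.random()
            # Conditional output sample: input i is held at fixed_value.
            y_cond = []
            for _ in range(n_cond):
                x = draw()
                x[i] = fixed_value
                y_cond.append(model(x))
            distances.append(ks_distance(y_uncond, y_cond))
        indices.append(max(distances))
    return indices

indices = cdf_based_indices(toy_model, n_inputs=3)
print(indices)
```

Because the comparison uses whole distributions rather than a single moment such as the variance, an index of this kind remains meaningful when the output distribution is skewed or multi-modal. Note that a non-influential input still yields a small positive value from sampling noise alone, so indices should be read relative to one another.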

REFERENCES

More information is available from the SAFE webpage: http://www.bris.ac.uk/cabot/resources/safe-toolbox/

Pianosi, F. and Wagener, T. (2015) A simple and efficient method for global sensitivity analysis based on cumulative distribution functions. Environmental Modelling & Software 67: 1-11.

Pianosi, F., Sarrazin, F. and Wagener, T. (2015) A Matlab toolbox for Global Sensitivity Analysis. Environmental Modelling & Software 70: 80-85.

Sarrazin, F.J., Pianosi, F. and Wagener, T. (2016) Global Sensitivity Analysis of environmental models: Convergence and validation. Environmental Modelling & Software 79: 135-152.

Pianosi, F., Beven, K., Freer, J.E., Hall, J.W., Rougier, J., Stephenson, D.B. and Wagener, T. (2016) Sensitivity analysis of environmental models: A systematic review with practical workflow. Environmental Modelling & Software 79: 214-232.