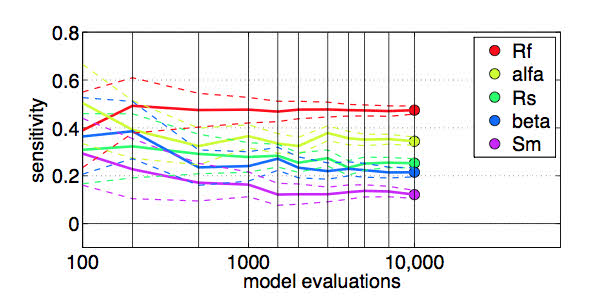

Francesca Pianosi, Fanny Sarrazin and Thorsten Wagener (Engineering) have developed the SAFE Toolbox, which provides a set of functions to perform Global Sensitivity Analysis in the Matlab/Octave environment. The toolbox is now available from the Cabot Institute website.

Objective and Subjective Probabilities in Natural Hazard Risk Assessment

Dr Stephen Jewson, RMS Ltd, London


Quantitative risk assessment often uses parametric models, and these models are often based on very little data, making the parameter estimates very uncertain. An example from the field of natural hazard risk assessment would be estimating the frequency distribution of intense hurricanes: there have been 71 intense hurricanes hitting the US in the last 114 years. This means that the mean annual number of intense hurricanes can only be estimated to within around 25%, and more subtle aspects of the distribution of the number of hurricanes are estimated even less well.
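A quick check of the "around 25%" figure, under an assumption the post does not state explicitly: that annual landfall counts are Poisson. The maximum-likelihood rate is then n/T, its standard error is sqrt(n)/T, so the relative standard error is 1/sqrt(n) and a normal-approximation 95% interval has a relative half-width of about 1.96/sqrt(n). The function name is illustrative.

```python
import math

def poisson_rate_uncertainty(n_events: int, n_years: float, z: float = 1.96):
    """Poisson rate estimate with its approximate relative uncertainty.

    Assumes (for illustration) that counts are Poisson, so the MLE of the
    rate is n/T and its standard error is sqrt(n)/T.
    """
    rate = n_events / n_years
    std_err = math.sqrt(n_events) / n_years
    rel_std_err = std_err / rate          # equals 1/sqrt(n)
    rel_half_width = z * rel_std_err      # ~95% interval half-width, normal approximation
    return rate, std_err, rel_std_err, rel_half_width

rate, se, rel_se, rel_hw = poisson_rate_uncertainty(71, 114)
print(f"rate = {rate:.3f}/yr, SE = {se:.3f}, relative SE = {rel_se:.1%}, 95% half-width = {rel_hw:.1%}")
```

With 71 events in 114 years this gives a relative standard error of about 12% and a 95% half-width of about 23%, consistent with the figure quoted in the text.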

Bayesian statistics provides a useful set of tools for quantifying parameter uncertainty and propagating it into predictions. Part of the Bayesian methodology is to specify a prior. If there is concrete prior information, the prior can be based on that. If not, then one has to choose between an objective prior and a subjective prior, and it’s important to understand the difference and to think carefully about which is preferable given the purpose of the analysis being done. In this post, we briefly review the issues.
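As a minimal sketch of propagating parameter uncertainty into a prediction, take the hurricane example and assume (for illustration only) a Poisson model for annual counts with the Jeffreys prior p(λ) ∝ λ^(-1/2), a standard objective choice for a Poisson rate. After n events in T years the posterior is Gamma(shape n + 1/2, rate T), and the predictive distribution for next year's count is negative binomial. Its variance exceeds that of the plug-in Poisson, which is the parameter uncertainty showing up in the prediction. Function names are hypothetical.

```python
import math

def jeffreys_poisson_predictive(n_events, n_years, k_max=10):
    """Posterior predictive pmf for next year's count under a Jeffreys prior.

    Assumed model: annual counts ~ Poisson(lam), prior proportional to lam**-0.5.
    Posterior: Gamma(shape=n + 0.5, rate=T). Integrating the Poisson over this
    posterior gives a negative binomial predictive distribution.
    """
    alpha = n_events + 0.5
    beta = float(n_years)
    p = beta / (beta + 1.0)
    pmf = []
    for k in range(k_max + 1):
        log_pk = (math.lgamma(k + alpha) - math.lgamma(alpha) - math.lgamma(k + 1)
                  + alpha * math.log(p) + k * math.log1p(-p))
        pmf.append(math.exp(log_pk))
    mean = alpha / beta
    var = alpha * (beta + 1.0) / beta ** 2
    return pmf, mean, var

def poisson_pmf(lam, k_max=10):
    """Plug-in Poisson pmf that ignores parameter uncertainty."""
    return [math.exp(-lam) * lam ** k / math.factorial(k) for k in range(k_max + 1)]

pmf, mean, var = jeffreys_poisson_predictive(71, 114)
plug_in = poisson_pmf(71 / 114)
print(f"predictive mean {mean:.3f}, variance {var:.3f}; plug-in variance {71 / 114:.3f}")
print(f"P(3 or more next year): predictive {1 - sum(pmf[:3]):.4f}, plug-in {1 - sum(plug_in[:3]):.4f}")
```

With 71 events the extra predictive variance is small. It grows quickly as the record gets shorter, which is exactly the situation the post describes.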

Objective Priors and Objective Probabilities

Objective priors are an attempt to get as close as possible to the scientific ideal of objectivity, which is usually taken to mean that the methods used to derive predictions and risk estimates should be as non-arbitrary and non-personal as possible. Perfect objectivity is unobtainable, but a key principle in science is to get as close to it as possible. In practice that means that modelling decisions (such as which model to use, which numerical scheme to use, what temporal or spatial resolution to use, and which prior to use) should be based on clearly stated and defensible criteria. For objective priors, a number of different criteria can be used. The two most common and widely accepted are (a) that results should be unchanged if we repeat the analysis after a simple transformation of parameters or variables, and (b) that probabilities should match observed frequencies as closely as possible. Together, these two are often enough to determine a prior. Although there is no complete theory that determines the best or a unique objective prior for each situation, there are many results and methods available. If one accepts the philosophy of wanting an objective prior, the main shortcoming of objective priors is that in some cases, especially for complex or new models, determining an objective prior may become a research project in itself. Some of the methods for determining objective priors, for instance, involve group theory, solving non-linear PDEs, or both. Other shortcomings have been discussed by statisticians, but they are mostly related to philosophical issues that are of little relevance to mathematical modellers in general. For simple cases like the normal distribution, predictions based on objective priors agree exactly with traditional prediction intervals.
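The last claim can be checked numerically. For a normal model with unknown mean and variance, the standard reference (independence Jeffreys) prior is p(μ, σ) ∝ 1/σ. The sketch below uses a made-up sample of ten values. It draws from the posterior predictive by Monte Carlo and compares its 2.5% and 97.5% quantiles with the classical prediction interval x̄ ± t·s·sqrt(1 + 1/n). The Student t quantile t(0.975, 9 df) ≈ 2.262 is hard-coded because the Python standard library has no t distribution.

```python
import math
import random
import statistics

# Hypothetical sample of n = 10 observations (illustrative only).
data = [2.1, 3.4, 1.9, 2.8, 3.9, 2.5, 3.1, 2.2, 3.6, 2.9]
n = len(data)
xbar = statistics.fmean(data)
s = statistics.stdev(data)              # sample SD with n - 1 denominator

# Classical prediction interval for one new observation.
T_975_DF9 = 2.262                       # 97.5% quantile of Student t with 9 df
half = T_975_DF9 * s * math.sqrt(1 + 1 / n)
classical = (xbar - half, xbar + half)

# Posterior predictive under the objective prior p(mu, sigma) proportional to 1/sigma.
rng = random.Random(42)
draws = []
for _ in range(100_000):
    chi2 = rng.gammavariate((n - 1) / 2, 2.0)       # chi-squared with n - 1 df
    sigma2 = (n - 1) * s ** 2 / chi2                  # sigma^2 | data
    mu = rng.gauss(xbar, math.sqrt(sigma2 / n))       # mu | sigma^2, data
    draws.append(rng.gauss(mu, math.sqrt(sigma2)))    # new observation
draws.sort()
bayes = (draws[int(0.025 * len(draws))], draws[int(0.975 * len(draws))])

print("classical interval:     ", [round(v, 3) for v in classical])
print("objective Bayes (MC):   ", [round(v, 3) for v in bayes])
```

The two intervals agree up to Monte Carlo noise, because the posterior predictive under this prior is exactly a Student t with n - 1 degrees of freedom, centred at x̄ with scale s·sqrt(1 + 1/n).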

Subjective Priors and Subjective Probabilities

Conversely, subjective priors are used when the goal is to include subjective, or personal, opinions in a calculation. The personal opinions, expressed as a distribution, are combined with the information from the model and the data to produce what are usually known as subjective, or personal, probabilities. For many mathematical models, writing down a subjective prior can be a lot easier than trying to determine an objective prior.

Subjective probabilities also have various shortcomings: (a) there is no particular reason for anyone to agree with anyone else’s subjective probabilities, and this can lead to a situation where everyone has their own view of a probability or a risk; whether this is good or bad depends on the purpose of the analysis, but it can be a problem when it is desirable to reach agreement; (b) in subject areas where the data are already well known to everyone in the field, it is likely to be impossible to ensure that a subjective prior is truly independent of the data, as it should be for the method to be strictly valid; (c) for high-dimensional problems, it is unrealistic either to have or to write down a prior opinion (e.g. on thousands of correlations in a large correlation matrix); (d) some mathematical models have parameters that only have meaning in the context of the model, and in these cases the idea of holding any kind of prior view does not make sense; and (e) it can be very difficult to write down a distribution that really does reflect one’s beliefs: for instance, when subjective priors are transformed from one coordinate system to another, they often end up having properties that the owner of the prior didn’t intend.
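Point (e) is easy to demonstrate. Suppose, hypothetically, that someone expresses "no strong view" as a uniform prior on the annual hurricane rate λ between 0.2 and 2 per year. The implied prior on the return period R = 1/λ is far from uniform: its density is proportional to 1/R², so it piles up at short return periods. The range and sample size below are arbitrary illustrative choices.

```python
import random

rng = random.Random(1)
a, b = 0.2, 2.0   # hypothetical 'uninformative' range for the annual rate (events/yr)
rates = [rng.uniform(a, b) for _ in range(200_000)]
return_periods = [1.0 / lam for lam in rates]        # years between events

# Prior probability that the return period is shorter than one year.
sampled = sum(r < 1.0 for r in return_periods) / len(return_periods)
exact = (b - 1.0) / (b - a)                          # = P(rate > 1) under the uniform prior
flat_in_r = (1.0 - 1.0 / b) / (1.0 / a - 1.0 / b)    # what a prior flat in R would give
print(f"P(R < 1 yr): implied {exact:.3f} (sampled {sampled:.3f}) vs flat-in-R {flat_in_r:.3f}")
```

The "flat" prior on the rate puts about 0.56 of its mass on return periods under a year, against about 0.11 for a prior that is flat in the return period. The owner of the prior may not have intended either.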

An additional problem with subjective priors that arises in the catastrophe modelling industry is that some insurance regulators require risk estimates to follow the principles of scientific objectivity, as described above, as closely as possible, and will not accept risk estimates in which the modeller is free to adjust the prior and change the results without clear justification.

Priors in Catastrophe Modelling

Based on the considerations given above, we at RMS are keen to quantify parameter uncertainty in our catastrophe models using objective Bayesian methods wherever possible. Figuring out how to do that is leading us on an interesting journey through the statistical literature, and round the world, and is even leading to some new mathematical results. Our collaboration with Trevor Sweeting has already led to the derivation of a new result related to the question of how best to predict data from the multivariate normal distribution (all credit to Trevor for that), and we are now working with Richard Chandler and Ken Liang (funded by NERC) on the question of how to derive objective probabilities for the hurricane prediction problem mentioned above.

A curious problem for UK science and industry is that although the concept of objective probability was originally developed here (by the geophysicist Harold Jeffreys), there now seems to be relatively little expertise in this field in the UK: the biggest and most productive groups of researchers in this area are in the US and Spain. Perhaps one objective of PURE might be to try to encourage the development of more local expertise in this field. We’d certainly be interested to make contact with anybody in the UK who has either interest or expertise in objective probabilities, and in how to apply them to the myriad mathematical modelling challenges that we face in catastrophe risk modelling.

Spurious Realism

Dr Jonathan Rougier

I’d like to share the transcript of a short presentation I gave recently at a session called All About The Model at the Battle of Ideas, organised by the Institute of Ideas at the Barbican on 19-20 October 2013. It concerns what I term ‘spurious realism’. Before you read on, I should make it clear that I have no reason to distrust vulcanologists! But I was searching for a strongly visual example.

Here is the transcript, lightly edited.

Models are abstractions. Their purpose is to organise the knowledge and the judgements that we have about the systems that we study. When you are looking at model output, you are looking at a representation of the knowledge and the judgements of the modeller. Now in the domain where the modeller is the expert, his knowledge and his judgements are probably a lot more reliable than yours or mine. So we have no reason, a priori, to distrust the output that we get from carefully considered scientific models constructed by domain experts.

However, there is one aspect of the modelling process which always causes me some concern, and alarm: what I will call ‘spurious realism’. Suppose that we went to Hollywood and we asked them to construct a simulation of a large volcanic eruption. There is no doubt that what we would get back would be spectacular, and you and I would probably be completely convinced that this was a real volcanic eruption. And the reason, of course, is that Hollywood knows what you and I (non-experts) think a volcanic eruption should look like. However, it would probably be unconvincing to a vulcanologist, and it wouldn’t be a good platform for making predictions.

Instead, we might have gone to a vulcanologist, with access to a state-of-the-art code for simulating volcanic eruptions, and asked him to construct a simulation of a large volcanic eruption. When he came back with the model output and showed us what he had done, we would see immediately that it was not realistic. We would see, for example, that spatially the domain had been pixelated into rectangles. That time didn’t progress smoothly, but was jerky. That the colour scheme was completely false: he might, for example, have used a blue-to-red-to-white colour scheme as a heat map. That the eruption itself had very few degrees of freedom. We would be in no doubt at all that what we were seeing was an artifact; this would be completely clear to us just by looking at the model output.

So if a vulcanologist had come to us with a simulation that looked a bit Hollywood — that looked a bit realistic — we ought to suspect that what he has done is cosmetically post-processed the output. But what he’s really done, and this is the concerning issue, is he’s removed from the model output those visual clues that reminded us that we are dealing with an artifact, and which should have cautioned us against putting too much trust in the model output.

So the point I’d like to make about spurious realism is that when you are dealing with scientific models of complex systems, like environmental systems, it’s almost paradoxical, but my view is that the more realistic the model output looks (the more Hollywood, if you like), the more cautious we ought to be in trusting the judgement of the modeller who constructed it.

Quantifying the unquantifiable: floods

Keith Beven

We had the first joint meeting of the RACER and CREDIBLE consortia in the area of floods at Imperial College last week. Saying something about the frequency and magnitude of floods is difficult, especially when it is a requirement to make estimates for low-probability events (Annual Exceedance Probabilities of 0.01 and 0.001 for flood plain planning purposes in the UK, for example) without very long records being available. Even for short-term flood forecasting there can be large uncertainties associated with observed rainfall inputs, and even larger ones associated with rainfall forecast into the future; there is also a strongly uncertain nonlinear relationship between the antecedent conditions in a catchment and how much of that rainfall becomes streamflow, and uncertainty in the routing of that streamflow down to areas at risk of flooding. In both flood frequency and flood forecasting applications, a further issue is how flood magnitudes might change in the future due to changes in both land management and climate.

Nearly all of these sources of uncertainty have both aleatory and epistemic components. As a hydrologist, I am not short of hydrological models – there are far too many in the literature and there are also modelling systems that provide facilities for choosing and combining different types of model components in representing the fast and slow elements of catchment response (e.g. PRMS, FUSE, SuperFLEX). I am, however, short of good ways of representing the uncertainties in the inputs and observed variables with which model outputs might be compared.

Why is this? It is because some of the errors and uncertainties are essentially unquantifiable epistemic errors. In rainfall-runoff modelling we have inputs that are not free of epistemic error being processed through a nonlinear, non-error-free model and compared with output observations that are not free from epistemic error. Some of the available data may actually be disinformative in the sense of being physically inconsistent (e.g. more runoff in the stream than observed rainfall, Beven et al., 2011), but in rather arbitrary ways from event to event. Patterns of intense rainfall in flood events are often poorly known, even if radar data are available. Flood discharges, which are the variable of interest here, are generally not observed but are rather constructed variables of unknown uncertainty (e.g. Beven et al., 2012). In this situation it seems to me that treating a residual model as simply additive and stationary is asking for trouble in prediction (where the epistemic errors might be quite different). There may well be an underlying stochastic process in the long term, but I do not think that is a particularly useful concept in the short term, when there appear to be changing residual characteristics both within and between events, particularly for those disinformative events. Most recently, we have been making estimates of two sets of uncertainty bounds in prediction: one treating the next event as if it might be part of the set of informative events, and one as if it might be part of the set of disinformative events (Beven and Smith, 2013). A priori, of course, we do not know.

This is not such an issue in flood forecasting. In that case we are only interested in minimizing bias and uncertainty for a limited lead time into the future and in many cases, when the response time of a catchment is as long as the lead time required, we can make use of data assimilation to correct for disinformation in the inputs and other epistemic sources of error. Even a simple adaptive gain on the model predictions can produce significant benefits in forecasting. It is more of an issue in the flash flood case, where it may be necessary to predict the rainfall inputs ahead of time to get forecasts with an adequate lead time (e.g. Alfieri et al., 2011; Smith et al., 2013). Available methods for predicting rainfalls involve large uncertainties in both locations of heavy rainfall and intensities. This might improve as methods of combining radar data with high resolution atmospheric models evolve, but will also require improved surface parameterisations in getting triggering mechanisms right.
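A minimal sketch of the "simple adaptive gain" idea. It assumes a multiplicative gain g such that observed flow ≈ g × modelled flow, updated by scalar recursive least squares with an exponential forgetting factor so that recent data dominate. This is a generic formulation chosen for illustration, not necessarily the scheme used in the studies cited; the forgetting factor, the function name and the toy data are hypothetical.

```python
def adaptive_gain_forecasts(modelled, observed, forgetting=0.95, g0=1.0, p0=1.0):
    """One-step-ahead corrected forecasts using a recursively updated gain.

    At each step the forecast is g * modelled[t], using the gain estimated
    from data up to t-1; the gain is then updated with the new observation
    by scalar recursive least squares with exponential forgetting.
    """
    g, p = g0, p0
    corrected = []
    for x, y in zip(modelled, observed):
        corrected.append(g * x)                 # forecast issued before y is seen
        k = p * x / (forgetting + x * x * p)    # RLS gain
        g = g + k * (y - g * x)                 # update the multiplicative gain
        p = (p - k * x * p) / forgetting        # update the (scaled) variance
    return corrected, g

# Toy example: the model systematically underestimates flow by 30%.
modelled = [10, 12, 15, 20, 30, 45, 40, 32, 25, 20, 16, 13]
observed = [1.3 * q for q in modelled]
corrected, final_gain = adaptive_gain_forecasts(modelled, observed)
print(f"final gain {final_gain:.4f}")
```

The forgetting factor below 1 lets the gain track slowly changing bias, which matters when, as argued above, the error characteristics are not stationary.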

The real underlying issue here comes back to whether epistemic errors can be treated as statistically stationary or whether, given the short periods of data that are often available, they result in model residual characteristics that are non-stationary. I think that if they are treated as stationary (particularly within a Gaussian information measure framework) it leads to gross overconfidence in inference (e.g. Beven, 2012; Beven and Smith, 2013). That is one reason why I have for a long time explored the use of more relaxed likelihood measures and limits of acceptability within the GLUE methodology (e.g. Beven, 2009). However, that does not change the fact that the only guide we have to future errors is what we have already seen in the calibration/conditioning data. There is still the possibility of surprise in future predictions. One of the challenges of the unquantifiable uncertainty issue in CREDIBLE/RACER research is to find ways of protecting against future surprise.
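A toy sketch of the limits-of-acceptability idea within GLUE, under loudly stated assumptions: a hypothetical one-parameter linear reservoir model, synthetic "observations" generated by that same model, and limits of ±20% around each observation. Parameter values are sampled at random, and only those whose simulations stay inside the limits at every time step are kept as behavioural. Predictions are then reported as the envelope of the behavioural runs rather than as a single best fit.

```python
import random

def linear_reservoir(rain, k, storage=0.0):
    """Hypothetical one-store model: each step, outflow is k times storage."""
    flows = []
    for r in rain:
        storage += r
        q = k * storage
        storage -= q
        flows.append(q)
    return flows

rain = [0, 5, 20, 10, 0, 0, 2, 0, 0, 0]
observed = linear_reservoir(rain, k=0.3)              # synthetic 'observations'
limits = [(0.8 * q, 1.2 * q) for q in observed]       # assumed +/-20% limits

rng = random.Random(0)
behavioural = []
for _ in range(5000):
    k = rng.uniform(0.05, 0.95)                       # assumed prior range for k
    sim = linear_reservoir(rain, k)
    # Keep the run only if it lies within the limits at every time step.
    if all(lo <= q <= hi for q, (lo, hi) in zip(sim, limits)):
        behavioural.append((k, sim))

# Prediction bounds: envelope of the behavioural simulations at each step.
bounds = [(min(s[t] for _, s in behavioural), max(s[t] for _, s in behavioural))
          for t in range(len(rain))]
ks = [k for k, _ in behavioural]
print(f"{len(behavioural)} behavioural runs; k between {min(ks):.3f} and {max(ks):.3f}")
```

In real applications the limits would come from an assessment of observation uncertainty (for example in the rating curve), not from a fixed percentage.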

References

Alfieri, L., Smith, P. J., Thielen-del Pozo, J., and Beven, K. J., 2011, A staggered approach to flash flood forecasting – case study in the Cevennes Region, Adv. Geosci., 29, 13-20.

Beven, K. J., Smith, P. J., and Wood, A., 2011, On the colour and spin of epistemic error (and what we might do about it), Hydrol. Earth Syst. Sci., 15, 3123-3133, doi: 10.5194/hess-15-3123-2011.

Beven, K. J., and Smith, P. J., 2013, Concepts of Information Content and Likelihood in Parameter Calibration for Hydrological Simulation Models, ASCE J. Hydrol. Eng., in press.

Beven, K. J., Buytaert, W., and Smith, L. A., 2012, On virtual observatories and modeled realities (or why discharge must be treated as a virtual variable), Hydrological Processes, doi: 10.1002/hyp.9261.

Beven, K. J., 2012, So how much of your error is epistemic? Lessons from Japan and Italy, Hydrological Processes, doi: 10.1002/hyp.9648, in press.

Smith, P. J., Panziera, L., and Beven, K. J., 2013, Forecasting flash floods using Data Based Mechanistic models and NORA radar rainfall forecasts, Hydrological Sciences Journal, in press.

PlumeRise – modelling the interaction of volcanic plumes and meteorology

Dr. Mark Woodhouse (Postdoctoral Research Assistant, School of Mathematics, University of Bristol)

Explosive volcanic eruptions, such as the eruptions of Eyjafjallajökull in 2010, Grímsvötn in 2011 and Puyehue-Cordón Caulle in 2011, inject huge quantities of ash high into the atmosphere that can be spread over large distances. The 2010 eruption of Eyjafjallajökull, Iceland, demonstrated the vulnerability of European and transatlantic airspace to volcanic ash in the atmosphere. Airspace management during eruptions relies on forecasting the spreading of ash.

A crucial requirement for forecasting ash dispersal is the rate at which material is delivered from the volcano to the atmosphere, a quantity known as the source mass flux. It is currently not possible to measure the source mass flux directly, so an estimate is made by exploiting a relationship between the source mass flux and the height of the plume, which is obtained from the fundamental dynamics of buoyant plumes and calibrated using a dataset of historical eruptions. However, meteorology is not included in the calibrated scaling relationship. Our recent study, published in the Journal of Geophysical Research, shows that meteorology at the time of the eruption, in particular wind conditions, has a large effect on the rise of the plume. Neglecting the wind can lead to underestimates of the source mass flux by more than a factor of 10.
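Why can neglecting wind produce such a large error? Here is a minimal sketch. It assumes, as in classical buoyant-plume theory for a still, stratified atmosphere, that plume height scales as the quarter power of the source flux, H = c·Q^(1/4), where c is a hypothetical calibration constant. Inverting for Q raises any error in H to the fourth power. So if wind bends the plume over and it reaches only about 56% of the height it would reach in still air, a windless inversion underestimates Q by a factor of about 10.

```python
def height_windless(q: float, c: float = 1.0) -> float:
    """Plume height from a windless quarter-power law H = c * Q**0.25 (c hypothetical)."""
    return c * q ** 0.25

def invert_windless(height: float, c: float = 1.0) -> float:
    """Source flux implied by the same windless law, given an observed height."""
    return (height / c) ** 4

q_true = 1.0e4                          # arbitrary units
h_calm = height_windless(q_true)        # height the plume would reach in still air
for ratio in (0.9, 0.75, 0.56):         # observed height / still-air height
    q_est = invert_windless(ratio * h_calm)
    print(f"height ratio {ratio:.2f} -> flux underestimated by factor {q_true / q_est:.1f}")
```

The underestimation factor is simply (still-air height / observed height)^4, independent of c. That is why a modest wind-induced reduction in rise height translates into an order-of-magnitude error in the inferred flux.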

Our model, PlumeRise, allows detailed meteorological data to be included in the calculation of the plume dynamics. By applying PlumeRise to the record of plume height observations during the Eyjafjallajökull eruption we reconstruct the behavior of the volcano during April 2010, when the disruption to air traffic was greatest. Our results show the source mass flux at Eyjafjallajökull was up to 30 times higher than estimated using the calibrated scaling relationship.

Underestimates of the source mass flux by such a large amount could lead to unreliable forecasts of ash distribution. This could have extremely serious consequences for the ash hazard to aviation. In order to allow our model to be used during future eruptions, we have developed the PlumeRise web-tool.

PlumeRise (www.plumerise.bris.ac.uk) is a free-to-use tool that performs calculations using our model of volcanic plumes. Users can input meteorological observations, or use idealized atmospheric profiles. Volcanic source conditions can be specified and the resulting plume height determined, or an inversion calculation can be performed where the source conditions are varied to match the plume height to an observation. Multiple runs can be performed to allow comparison of different parameter sets.
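The inversion mode can be illustrated generically. If the forward model's plume height increases monotonically with source flux, a bisection search on log-flux finds the flux whose predicted height matches an observation. The forward model below is a hypothetical stand-in (a quarter-power law multiplied by an arbitrary wind-reduction factor), not the PlumeRise model. The function names, constants and bracketing range are all assumptions.

```python
import math
from typing import Callable

def invert_height(forward: Callable[[float], float], observed_height: float,
                  q_lo: float = 1e2, q_hi: float = 1e10, tol: float = 1e-10) -> float:
    """Find Q with forward(Q) == observed_height by bisection on log10(Q).

    Assumes forward() increases with Q and that the target lies in [q_lo, q_hi].
    """
    if not forward(q_lo) <= observed_height <= forward(q_hi):
        raise ValueError("observed height is outside the bracketing range")
    lo, hi = math.log10(q_lo), math.log10(q_hi)
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if forward(10 ** mid) < observed_height:
            lo = mid
        else:
            hi = mid
    return 10 ** (0.5 * (lo + hi))

def toy_forward(q: float, wind_factor: float = 0.6, c: float = 0.1) -> float:
    """Hypothetical forward model: height (km) reduced by a crude wind factor."""
    return wind_factor * c * q ** 0.25

q_hat = invert_height(toy_forward, observed_height=8.0)
print(f"inferred flux {q_hat:.3e}; a windless inversion gives {(8.0 / 0.1) ** 4:.3e}")
```

Bisecting in log-space keeps the search well conditioned when the plausible fluxes span many orders of magnitude. A real forward model would need a root finder that tolerates slower and noisier evaluations.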

The PlumeRise model was developed at the University of Bristol by Mark Woodhouse, Andrew Hogg, Jeremy Phillips and Steve Sparks. The PlumeRise web-tool was developed by Chris Johnson (University of Bristol). The tool has been tested by several of the Volcanic Ash Advisory Centres (VAACs). It is also being used by academic institutions around the world. Our research is part of the VANAHEIM project. The development of the PlumeRise web-tool was supported by the University of Bristol’s Enterprise and Development Fund.

Variation and Uncertainty in European Windstorm Footprints

David Stephenson and Laura Dawkins

Windstorms, or extra-tropical cyclones with intense surface wind speeds, are a major source of risk for people in Europe. Windstorms can cause aggregate insured losses comparable to those of landfalling US hurricanes: for example, the cluster of three storms Anatol, Lothar and Martin in December 1999 led to insured losses of US$11bn. Over 1990-1998, windstorms caused average economic damage of US$2.5 billion per year and insured losses of US$1.8 billion per year. They rank as the second highest cause of global natural catastrophe insurance loss after US hurricanes.

The loss due to European windstorms depends not only on the frequency and intensity but the spatial extent of the resulting footprint. The footprint of a storm is defined as the maximum 3 second gust at each grid point in the model domain over a 72 hour window centred on the time at which the maximum wind speed over land along the track is achieved during the storm.
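The footprint definition translates directly into a few lines of code. The sketch below makes several assumptions. Gust fields are stored as a list of time steps, each a list of grid-point values. There is a matching land mask. The peak time is the step with the largest gust at any land point, a simplification of "maximum wind speed over land along the track". The window runs 36 hours either side of the peak. The data layout and function name are hypothetical.

```python
def storm_footprint(gusts, land_mask, hours_per_step=1, window_hours=72):
    """Maximum gust at each grid point over a window centred on the storm peak.

    gusts: gust fields (m/s), one list of grid-point values per time step.
    land_mask: booleans, True for land points (at least one must be True).
    """
    land_max = [max(g for g, is_land in zip(step, land_mask) if is_land) for step in gusts]
    t_peak = max(range(len(gusts)), key=land_max.__getitem__)
    half = (window_hours // 2) // hours_per_step        # steps either side of the peak
    window = gusts[max(0, t_peak - half): t_peak + half + 1]
    footprint = [max(step[i] for step in window) for i in range(len(land_mask))]
    return footprint, t_peak

# Toy example: 6-hourly data for three points (two land, one sea).
gusts = [[0.0, 0.0, 0.0] for _ in range(30)]
gusts[15][0] = 40.0   # land peak at step 15
gusts[10][1] = 25.0   # inside the +/-36 h window
gusts[0][2] = 50.0    # sea gust long before the peak: excluded
gusts[20][2] = 30.0   # sea gust inside the window
footprint, t_peak = storm_footprint(gusts, [True, True, False], hours_per_step=6)
print(t_peak, footprint)
```

Restricting the window matters. Without it, an unrelated earlier storm (the 50 m/s sea gust here) would contaminate the footprint.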

This project aims at building and testing methodologies that can be used to estimate the risk of intense windstorm events by understanding the variation and uncertainty in windstorm footprints and the mechanisms which cause these variations and uncertainties (e.g. large-scale atmospheric circulation systems and model error).

A common assumption in catastrophe models is that the spatial extent of a windstorm footprint is constant. However, windstorms are observed to have very different spatial extents. This is evident from the image below, which displays the footprints and exceedance footprints (thresholds of 25 m/s over land and 30 m/s over sea, chosen to ensure relevance to wind damage risk) for three major insurance-loss windstorm events.

The model used to generate the windstorm footprints is based on the Met Office North Atlantic-European operational Numerical Weather Prediction (NWP) model (UM vn 7.4, parallel suite PS24). The model is run at a resolution of 25km.

Currently a historical eXtreme Wind Storms (XWS) catalogue is being developed by the Met Office, the University of Exeter and the University of Reading due for release in August 2013. The catalogue will be freely available for commercial and academic use and will contain information on the storm tracks and footprints of 50 extreme windstorms. The catalogue aims to facilitate research into storm characteristics and the influence of atmospheric variability and climate change on European windstorms and will be a wonderful resource for windstorm work within both CREDIBLE and RACER.

My role in developing the catalogue has been to derive an objective method for selecting severe, high-insurance-loss storms to be included in the catalogue. To achieve this, the key indicators of high-insurance-loss storms were investigated and found to be the maximum wind speed over land and the exceedance area over land (threshold 25 m/s). This result is illustrated in the plot below, where 23 famous high-insurance-loss storms are shown in red. This finding reiterates the importance of varying the spatial extent of windstorms within catastrophe models.
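Here is a sketch of the two selection indicators computed from a footprint: the maximum gust over land, and the land area where the gust exceeds 25 m/s. The exceedance footprint also applies the 30 m/s sea threshold described earlier. Equal-area 25 km × 25 km cells, matching the model resolution quoted above, are an assumption; a real grid would need per-cell areas. Function names are hypothetical.

```python
LAND_THRESHOLD = 25.0          # m/s, from the text
SEA_THRESHOLD = 30.0           # m/s, from the text
CELL_AREA_KM2 = 25.0 * 25.0    # assumed equal-area 25 km grid cells

def exceedance_footprint(footprint, land_mask):
    """True where the footprint gust exceeds the land or sea threshold."""
    return [g > (LAND_THRESHOLD if land else SEA_THRESHOLD)
            for g, land in zip(footprint, land_mask)]

def loss_indicators(footprint, land_mask, cell_area_km2=CELL_AREA_KM2):
    """Maximum gust over land and exceedance area over land (km^2)."""
    land_gusts = [g for g, land in zip(footprint, land_mask) if land]
    max_land = max(land_gusts)
    area = cell_area_km2 * sum(g > LAND_THRESHOLD for g in land_gusts)
    return max_land, area

fp = [20.0, 26.0, 31.0, 28.0, 35.0]
land = [True, True, True, False, False]
print(exceedance_footprint(fp, land), loss_indicators(fp, land))
```

Two storms with the same peak gust can differ greatly in exceedance area. That is why both indicators, rather than the peak alone, separate the high-loss events.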

Later in the CREDIBLE project, work will be carried out at the UK Met Office using ensemble prediction methods to allow for the quantification of forecast uncertainty of windstorm footprints on different time and length scales. The aim is to exploit this information within the larger and more complete framework for uncertainty estimation being developed within the CREDIBLE consortium project. A further aim is to use the windstorm footprint uncertainty in a risk assessment tool as part of the Met Office’s Hazard Impact Model.

Uncertainty in Weather Forecasting

Ken Mylne, Met Office

Uncertainty is an inherent part of weather forecasting: the very word ‘forecast’, coined by Admiral FitzRoy, who founded the Met Office over 150 years ago, distinguishes it from a prediction by implying uncertainty. Chaos theory (the idea that forecasts of non-linear systems are sensitive to small errors in initial conditions) originated in early experiments in numerical modelling in meteorology.

Modern weather forecasting, using highly complex and non-linear models of atmospheric circulation and physics, uses a technique called ensemble forecasting to explicitly address uncertainty in chaotic systems. Ensemble forecasting is similar in concept to Monte-Carlo modelling, but with many fewer model runs due to the high computational cost of forecast models. Small perturbations are added to the analysis of the current state of the atmosphere, and multiple forecasts are run forward from the perturbed initial states. Small perturbations to aspects of model physics are often also employed to address uncertainty due to approximations in model representations of physics and unresolved sub-grid scale motions. The ensemble then provides a set of typically between 20 and 50 separate forecast solutions, each a self-consistent forecast scenario. The set may be used to estimate the likelihood of different forecast outcomes and to assess risks associated with different scenarios, particularly with potentially high-impact weather – which is where PURE comes in.
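The ensemble idea can be demonstrated on the Lorenz (1963) system, the classic toy model of atmospheric chaos, used here purely as a stand-in for a forecast model. Each member starts from the same "analysis" plus a small random perturbation. The fraction of members in which an event occurs gives its forecast probability, and the growth of spread shows the loss of predictability. The ensemble size, perturbation size and event definition are arbitrary choices for illustration.

```python
import random
import statistics

def lorenz63_rk4_step(state, dt=0.01, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Advance the Lorenz-63 system by one fourth-order Runge-Kutta step."""
    def tendency(s):
        x, y, z = s
        return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)

    def shifted(s, k, h):
        return tuple(si + h * ki for si, ki in zip(s, k))

    k1 = tendency(state)
    k2 = tendency(shifted(state, k1, dt / 2))
    k3 = tendency(shifted(state, k2, dt / 2))
    k4 = tendency(shifted(state, k3, dt))
    return tuple(s + dt / 6 * (a + 2 * b + 2 * c + d)
                 for s, a, b, c, d in zip(state, k1, k2, k3, k4))

def run_ensemble(analysis, n_members=24, perturbation=1e-3, n_steps=1000, seed=0):
    """Perturb the analysis, integrate every member, return members and x-spread."""
    rng = random.Random(seed)
    members = [tuple(v + rng.gauss(0.0, perturbation) for v in analysis)
               for _ in range(n_members)]
    spread_start = statistics.pstdev([m[0] for m in members])
    for _ in range(n_steps):
        members = [lorenz63_rk4_step(m) for m in members]
    spread_end = statistics.pstdev([m[0] for m in members])
    return members, spread_start, spread_end

members, spread_start, spread_end = run_ensemble((1.0, 1.0, 20.0))
# Forecast probability of a (hypothetical) event: x > 0 at the final time.
p_event = sum(m[0] > 0 for m in members) / len(members)
print(f"x-spread grew from {spread_start:.4f} to {spread_end:.2f}; P(x > 0) = {p_event:.2f}")
```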

Ensemble forecasting has traditionally been used for medium-range forecasting several days ahead, where the synoptic-scale evolution of large-scale pressure systems is highly chaotic, as well as for longer-range prediction. However, the recent advent of models with grid-lengths of 1-4 km, which can partially resolve the highly non-linear circulations within convective storms such as thunderstorms, means that ensembles are now an essential feature of forecasts for the next 12-24 hours. Figure 1 shows an example of a forecast from a 2.2 km convection-permitting ensemble of rain falling within a particular hour exceeding 0.2 mm. Here a post-processing method is also used to account for the limited sampling of the ensemble and to smooth the probabilities, by allowing for the possibility that the rain seen at one grid-point in the model could equally likely be falling at adjacent grid-points within around 30 km.
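Neighbourhood post-processing can be sketched as follows. For each grid point, the probability is the fraction of (member, nearby grid point) pairs in which rain exceeds the threshold. The neighbourhood is a square whose half-width is about 30 km expressed in grid lengths, roughly 14 points on a 2.2 km grid. The square shape, the list-of-lists fields and the function name are illustrative assumptions, not the Met Office implementation.

```python
def neighbourhood_probability(members, threshold, radius):
    """Fraction of (member, neighbouring point) pairs exceeding threshold.

    members: list of 2-D fields (lists of rows), one per ensemble member.
    radius: neighbourhood half-width in grid points (square neighbourhood).
    """
    ny, nx = len(members[0]), len(members[0][0])
    prob = [[0.0] * nx for _ in range(ny)]
    for j in range(ny):
        for i in range(nx):
            hits = total = 0
            for field in members:
                for jj in range(max(0, j - radius), min(ny, j + radius + 1)):
                    for ii in range(max(0, i - radius), min(nx, i + radius + 1)):
                        hits += field[jj][ii] > threshold
                        total += 1
            prob[j][i] = hits / total
    return prob

radius_points = round(30 / 2.2)   # ~30 km on a 2.2 km grid -> 14 points

# Toy example: two members on a 3x3 grid; only member a has rain, at one corner.
a = [[5.0, 0.0, 0.0], [0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
b = [[0.0] * 3 for _ in range(3)]
print(neighbourhood_probability([a, b], threshold=0.2, radius=1))
```

The neighbourhood spreads a single member's rain over nearby points. This both smooths the field and makes up for having only a few tens of members.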

Figure 1: Example of forecast of heavy rainfall (exceeding 16mm/hr) from the Met Office’s 2.2km MOGREPS-UK ensemble post-processed with “neighbourhood” processing to account for under-sampling by the ensemble.

Ensemble forecasts provide a powerful tool for estimating the likelihood of extreme weather events, but properly understanding the risk requires this to be translated into the probability of a significant impact on vulnerable assets. This is a significant challenge because each vulnerable asset has its own impact mechanism, which must be modelled. Furthermore, the impact is more difficult to measure objectively, and therefore the forecasts are difficult to validate or calibrate in any objective manner. This work to develop a Hazard Impact Model (HIM) is being attempted collaboratively within the Natural Hazards Partnership, a collaboration of several UK agencies supported by the Cabinet Office, with the aim of providing guidance to the government on societal risk from natural hazards. Two of the NERC PURE PhD studentships sponsored by the Met Office aim to contribute to the HIM:

• At Exeter University within the CREDIBLE consortium, looking at impacts of severe windstorms, and

• At Reading University within the RACER consortium, addressing impacts of cold temperature outbreaks.

One of the biggest challenges is the communication of uncertainty to the users of weather forecasts. Traditionally forecasters have always expressed uncertainties with subjective phrases such as “rain at times, heavy in places in the north, perhaps leading to localised flooding”. With increasingly automated forecasts for very specific locations there is a need to express uncertainty through symbols or graphics and in ways that people understand, and believe that they understand. A number of experiments over recent years have demonstrated that people from a range of social and educational backgrounds can make better decisions when presented with more complex probabilistic forecast information than if they are given a simple deterministic (or categorical) forecast. Despite this, the message that frequently comes back from customers and service managers is “people don’t understand probabilities” and “users just need to make a decision”, so users appear not to believe that they can understand the uncertainty information or know how to respond to it. As scientists we may be able to demonstrate that providing uncertainty represents a more complete picture of the forecast, and allows for the possibility of better decision-making (assuming of course that the uncertainty information has skill), but convincing users of this, from government civil protection agencies and commercial business users to the general public, remains a significant challenge. We hope that the PURE project will make a significant contribution to breaking down these barriers and finding improved ways of communicating the complete forecast picture.

STOP PRESS

On the day of posting, we have just received news of the terrible tornado near Oklahoma City. Tornadoes are a great example of a low-probability high-impact event, especially when you forecast the probability of an individual property or person being affected. But in general the people of Oklahoma know well how to respond to watches (issued hours ahead with low probabilities over areas at risk) and warnings (issued minutes ahead with higher probability). Sadly, on this occasion the storm was so severe that their normal protection did not protect everyone. We are currently experimenting, in collaboration with the US National Severe Storms Laboratory, with new ensemble tools and high-resolution models for forecasting tornado risk. Very early indications are encouraging.

Lahars – Floods of Volcanic Mud

Jeremy Phillips

In this installment, I will introduce some of the hazards and research questions concerning the strange-sounding Lahar, or volcanic mudflow, if your Indonesian is a little rusty. Firstly some things about me: I am an academic Volcanologist with interests in developing models of volcanic processes that can be used to assess their hazard in practical situations, so I have broad interests in uncertainty in hazard modeling. Secondly, this is my first blog post ever (there’s probably some term for this), so here goes…

Lahars are a mixture of volcanic ash, other rocks and soils, and water, with a consistency similar to wet concrete. They are formed in two main ways: when a volcano erupts hot ash onto its snow-covered or glaciated flanks and surroundings, or when intense rainfall remobilizes volcanic ash deposits from previous eruptions or ongoing activity. The resulting mixture of ash and water tends to flow in existing valleys, which are typically steep-sided higher on the volcano and feed into flatter river systems. Like other hazardous mass flows, lahars are erosive on steep slopes (this is where the ‘other rocks and soils’ come from) and form deposits on shallow slopes, but in lahars the addition of rock and soil by erosion is extreme: the volume of a lahar can increase by up to a factor of ten due to this effect. The flow carries a high concentration of solids, which allows large boulders to be transported, so building damage can be considerable when the flow overtops river channels in inhabited regions. Lahars can also be extremely fast-moving; have a look at http://www.youtube.com/watch?v=kznwnpNTB6k for three amazing clips of recent lahars in Japan.

Despite their initiation in localized regions, lahars can be very large and destructive. A key driver in the development of volcanic hazards communication videos in the 1980s was the lahar resulting from the eruption of Nevado del Ruiz in Colombia in 1985, which destroyed the town of Armero 74 km away, with an estimated loss of 23,500 lives. Lahar activity is common in countries with high snow-covered volcanoes, such as Japan and the Andean countries; in countries with active volcanoes and seasonal rainfall, including Indonesia and Central America; and simply where there has been a large recent explosive eruption, such as the areas of the western US affected by the 1980 Mt St Helens eruption. Lahars are closely related to mudflows and debris flows, and these hazards are widely distributed around the globe.

Update on ‘earthquake hazard/risk’ activities in CREDIBLE

Katsu Goda

On 20th April, 2013, another dreadful earthquake disaster occurred in Sichuan, China. The earthquake was a moment magnitude (Mw) 6.6 event that originated on the Longmenshan fault (http://earthquake.usgs.gov/earthquakes/eventpage/usb000gcdd#summary). As of 27th April, the number of fatalities (including those missing) was 217. In addition, more than 13,000 people were injured and more than 2 million were affected by the earthquake. The source region of the earthquake was the same as that of the catastrophic 12th May 2008 Mw 7.9 Wenchuan earthquake, which killed more than 69,000 people.

From an earthquake risk management perspective, this tragic event reminds us of two important facts. Firstly, earthquake damage results from the interaction between the seismic protection that has actually been implemented and the seismic hazard that is actually experienced. In simple terms, damage occurs when the seismic demand on a structure exceeds its seismic capacity. Many reports and news stories have indicated that the seismic design provisions for buildings and infrastructure in Sichuan province, and their implementation, were not sufficient even after the 2008 earthquake; newly constructed buildings were severely damaged or collapsed during the recent event. Because adequate techniques and devices for mitigating seismic damage to physical structures (e.g. ductile connections, dampers and isolation) are available, the damage and losses could have been reduced significantly. It is also important to recognise that recovery and reconstruction after disasters provide opportunities to fix such problems fundamentally, and we should take advantage of these opportunities proactively.
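
The demand-versus-capacity statement above can be made quantitative with a standard textbook reliability idealisation (not a method taken from this post): if the seismic demand D and the capacity C of a structure are treated as independent lognormal variables with medians D_med, C_med and logarithmic standard deviations β_D, β_C, then ln D - ln C is normally distributed and the probability of damage is P(D > C) = Φ(ln(D_med / C_med) / √(β_D² + β_C²)). The minimal Python sketch below assumes that idealisation; the function names and the ductility values in the usage lines are hypothetical and purely illustrative.

```python
import math


def standard_normal_cdf(x: float) -> float:
    """Cumulative distribution function of the standard normal distribution."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def prob_demand_exceeds_capacity(demand_median: float, demand_beta: float,
                                 capacity_median: float, capacity_beta: float) -> float:
    """P(D > C) for independent lognormal demand D and capacity C.

    Hypothetical idealisation: ln D ~ N(ln demand_median, demand_beta**2) and
    ln C ~ N(ln capacity_median, capacity_beta**2), independent of each other.
    """
    # ln D - ln C is normal with mean ln(D_med / C_med) and this standard deviation.
    beta_total = math.sqrt(demand_beta ** 2 + capacity_beta ** 2)
    return standard_normal_cdf(math.log(demand_median / capacity_median) / beta_total)


if __name__ == "__main__":
    # Hypothetical example in terms of ductility: the same demand, with the
    # median capacity doubled (e.g. by better detailing).
    original = prob_demand_exceeds_capacity(2.0, 0.5, 4.0, 0.4)
    improved = prob_demand_exceeds_capacity(2.0, 0.5, 8.0, 0.4)
    print(f"P(damage), original capacity: {original:.3f}")
    print(f"P(damage), doubled capacity:  {improved:.3f}")
```

With these made-up numbers, doubling the median capacity lowers the computed damage probability from about 0.14 to about 0.015. This illustrates why the mitigation measures mentioned above could reduce losses, even when the hazard itself is unchanged.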

Secondly, major earthquakes occur repeatedly in the same seismic region, and triggered events can cause significant damage. The 2013 event may not have been directly triggered by the 2008 event. Nonetheless, it is suspected that the earlier event had some influence on the occurrence of the recent one through complex stress interactions within the Longmenshan fault system (this is plausible in light of the triggering of the 1999 Mw 7.1 Hector Mine earthquake by the 1992 Mw 7.3 Landers earthquake in California; Felzer et al., 2002). The destructiveness of repeated earthquakes has also been highlighted by recent earthquake disasters, particularly the 2010-2011 Canterbury sequence (Shcherbakov et al., 2012). The mainshock was the 4th September 2010 Mw 7.1 Darfield earthquake, which occurred about 40 km from the centre of Christchurch. After this event, aftershocks continued and migrated towards the city. On 22nd February 2011, the major Mw 6.3 Christchurch earthquake struck at shallow depth very close to downtown Christchurch, causing severe damage to buildings and infrastructure.

The current probabilistic seismic hazard and risk analysis framework does not account for the impact of aftershocks. It focuses mainly on the effects of mainshocks, which are modelled by stationary Poisson processes (or, in some cases, renewal processes). Clearly, in a post-mainshock situation, the aftershock generation process is no longer time-invariant. As part of the PURE-CREDIBLE project, I have been working on this issue by analysing worldwide aftershock sequences to develop simple statistical models based on the Gutenberg-Richter law, the modified Omori law and Båth's law (Shcherbakov et al., 2013). The main aim is to evaluate how well these three seismological models fit actual mainshock-aftershock data and to quantify the uncertainty (variability) of the model parameters. These models facilitate the quantification of aftershock hazard following a major mainshock, as well as the simulation of artificial mainshock-aftershock sequences. Building upon this, I have further investigated how to assess the nonlinear seismic damage potential of aftershocks on structures, in addition to that of mainshocks (Goda and Taylor, 2012; Goda and Salami, 2013). Extensive nonlinear dynamic simulations of structural behaviour under real mainshock-aftershock sequences were conducted to establish an empirical benchmark for aftershock effects on structures. Moreover, a procedure for generating artificial mainshock-aftershock sequences was devised, and it was verified that real and artificial sequences have equivalent nonlinear damage potential. With these newly developed seismological and engineering tools, one can assess aftershock hazard and risk quantitatively; the tools can also serve as a starting point for evaluating aftershock impact in regions of low-to-moderate seismicity, where real mainshock-aftershock sequences are not available.
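
To illustrate how the three laws named above can be combined into a simple aftershock forecast, the sketch below uses one common textbook combination. This is an assumption; it is not necessarily the exact formulation of Shcherbakov et al. (2013). Båth's law fixes the magnitude of the largest aftershock at M_main - ΔM. The Gutenberg-Richter law with slope b then gives the total number of aftershocks with magnitude ≥ m as N(≥m) = 10^(b(M_main - ΔM - m)). The modified Omori law, with a rate proportional to 1/(c + t)^p, distributes those aftershocks in time. The function names and the default parameter values (b = 1.0, ΔM = 1.2, c = 0.05 days, p = 1.1) are illustrative placeholders, not values fitted to any real sequence.

```python
import math


def omori_integral(t1: float, t2: float, c: float, p: float) -> float:
    """Integral of the modified Omori kernel 1 / (c + t)**p from t1 to t2 (days)."""
    if p == 1.0:
        return math.log((c + t2) / (c + t1))
    # For p > 1 and t2 = math.inf, (c + t2)**(1 - p) evaluates to 0.0.
    return ((c + t1) ** (1.0 - p) - (c + t2) ** (1.0 - p)) / (p - 1.0)


def expected_aftershocks(m_main: float, m: float, t1: float, t2: float,
                         b: float = 1.0, delta_m: float = 1.2,
                         c: float = 0.05, p: float = 1.1) -> float:
    """Expected number of aftershocks with magnitude >= m between t1 and t2 days
    after a mainshock of magnitude m_main (hypothetical default parameters)."""
    if p <= 1.0:
        raise ValueError("p must exceed 1 so that the total aftershock count is finite")
    # Gutenberg-Richter + Båth's law: total count with magnitude >= m over the whole sequence.
    n_total = 10.0 ** (b * (m_main - delta_m - m))
    # Modified Omori law: fraction of the whole sequence (t from 0 to infinity) in [t1, t2].
    fraction = omori_integral(t1, t2, c, p) / omori_integral(0.0, math.inf, c, p)
    return n_total * fraction


def prob_at_least_one(expected: float) -> float:
    """Probability of one or more events, assuming a non-homogeneous Poisson process."""
    return 1.0 - math.exp(-expected)


if __name__ == "__main__":
    # Hypothetical example: an Mw 7.1 mainshock, aftershocks of M >= 5.0 in days 1 to 30.
    n = expected_aftershocks(7.1, 5.0, 1.0, 30.0)
    print(f"expected count: {n:.2f}, P(at least one): {prob_at_least_one(n):.2f}")
```

Because the Omori rate decays with time, the same calculation for a later window (say days 30 to 60) gives a smaller expected count. This is the time-dependence that a stationary Poisson model of mainshocks cannot represent.
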
Importantly, the extended seismic hazard and risk analysis methodology takes triggered/induced hazards into account more comprehensively (although further improvements are needed in this regard, for example by including geotechnical hazards and tsunamis), and it envisages a dynamic, rather than quasi-static, risk assessment framework. Nevertheless, major challenges in characterising aftershock generation remain. The developments described above focus on the temporal features of aftershock occurrence (e.g. the decay of seismic activity after a mainshock), while the spatial dependence of aftershocks is not explicitly considered. A workable technique is the ETAS (Epidemic Type Aftershock Sequence) model (Ogata and Zhuang, 2006), which allows individual aftershocks to generate their own aftershock sequences and allows aftershock occurrence rates to vary in space. Prof. Ian Main of the PURE-RACER consortium is actively working on this issue.
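
The self-exciting idea behind ETAS can be seen in the temporal form of its conditional intensity, as it is commonly written in the ETAS literature: λ(t) = μ + Σ_{t_i < t} K exp(α(m_i - m_0)) / (t - t_i + c)^p. Here μ is a background rate, m_0 is a reference (cutoff) magnitude, and the sum runs over every earlier event, aftershocks included. The sketch below evaluates only this temporal part. The space-time models of Ogata and Zhuang (2006) add a spatial kernel that is omitted here. The function name and all parameter values in the usage example are hypothetical.

```python
import math
from typing import Iterable, Tuple


def etas_intensity(t: float, events: Iterable[Tuple[float, float]],
                   mu: float, k: float, alpha: float,
                   c: float, p: float, m0: float) -> float:
    """Temporal ETAS conditional intensity (events per unit time) at time t.

    events: (time, magnitude) pairs for the catalogue; only events before t count.
    """
    rate = mu  # background (non-triggered) seismicity rate
    for t_i, m_i in events:
        if t_i < t:
            # Every earlier event, mainshock or aftershock, triggers its own
            # Omori-type sequence, with productivity growing exponentially with magnitude.
            rate += k * math.exp(alpha * (m_i - m0)) / (t - t_i + c) ** p
    return rate


if __name__ == "__main__":
    # Hypothetical catalogue: a mainshock at day 0 and one of its aftershocks at day 2.
    catalogue = [(0.0, 7.1), (2.0, 5.5)]
    params = dict(mu=0.02, k=0.01, alpha=1.5, c=0.01, p=1.1, m0=4.0)
    for day in (1.0, 2.5, 10.0):
        print(day, etas_intensity(day, catalogue, **params))
```

The aftershock at day 2 raises the intensity after day 2 above what the mainshock alone would produce. This is the "aftershocks generate their own aftershocks" behaviour that distinguishes ETAS from a single modified Omori decay.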

Another notable development in engineering seismology and earthquake engineering is the (near) completion of the NGA-WEST2 project (see http://peer.berkeley.edu/ngawest2/ for general information) on ground motion prediction models for shallow crustal earthquakes worldwide. Various research outcomes from the NGA-WEST2 project were presented at the 2013 Seismological Society of America annual meeting in Salt Lake City. The new set of ground motion models will eventually replace the 2008 models. The new suite improves the modelling of directivity and directionality (e.g. near-fault motions), extends the applicable range to lower magnitudes (i.e. magnitude scaling), includes models for vertical motions, quantifies epistemic uncertainty, and refines the evaluation of soil amplification factors (including nonlinear site response). All these refinements are important and relevant for seismic hazard assessment in Europe and the U.K. In particular, more robust magnitude scaling of the predicted ground motion will enhance the accuracy of seismic hazard assessment (note that the ongoing NGA-EAST project will have a greater influence on seismic hazard and risk assessment in the U.K.).

Lastly, I would like to draw attention to the publication of the ‘Handbook of seismic risk analysis and management of civil infrastructure systems’ (Woodhead Publishing, Cambridge, U.K.; http://www.woodheadpublishing.com/en/book.aspx?bookID=2497), which I co-edited. The contributors to the handbook are international (U.K., Italy, Germany, Greece, Turkey, Canada, U.S.A., Japan, Hong Kong, New Zealand, and Colombia) and have diverse professional backgrounds and interests. The handbook covers a wide range of topics related to earthquake hazard assessment, seismic risk analysis, and risk management for civil infrastructure. One of its main aims is to provide a state-of-the-art overview of seismic risk analysis and uncertainty quantification. I hope that it will be an invaluable guide for professionals who need to understand the impact of earthquakes on buildings and lifelines, and the seismic risk assessment and management of buildings, bridges and transportation systems.

References

Felzer, K.R., Becker, T.W., Abercrombie, R.E., Ekström, G., Rice, J.R. (2002). Triggering of the 1999 Mw 7.1 Hector Mine earthquake by aftershocks of the 1992 Mw 7.3 Landers earthquake. J. Geophys. Res.: Solid Earth, 107, ESE 6-1–ESE 6-13.

Goda, K., Taylor, C.A. (2012). Effects of aftershocks on peak ductility demand due to strong ground motion records from shallow crustal earthquakes. Earthquake Eng. Struct. Dyn., 41, 2311-2330.

Goda, K., Salami, M.R. (2013). Cloud and incremental dynamic analyses for inelastic seismic demand estimation subjected to mainshock-aftershock sequences. Bull. Earthquake Eng. (in review).

Ogata, Y., Zhuang, J. (2006). Space–time ETAS models and an improved extension. Tectonophysics, 413, 13-23.

Shcherbakov, R., Nguyen, M., Quigley, M. (2012). Statistical analysis of the 2010 Mw 7.1 Christchurch, New Zealand, earthquake aftershock sequence. New Zealand J. Geol. Geophys., 55, 305-311.

Shcherbakov, R., Goda, K., Ivanian, A., Atkinson, G.M. (2013). Aftershock statistics of major subduction earthquakes. Bull. Seismol. Soc. Am. (in review).